- Zainab Siddiqui


Recurrent Neural Networks: Knowing Basics and Applications

Recurrent Neural Networks (RNNs) are a type of neural network commonly used in Natural Language Processing (NLP) and speech recognition. RNNs have loops that allow information to persist and be fed back into the network. This allows them to process sequences of inputs such as text, speech, or time series data.


March 15, 2023 – 4 min read

Neural networks are machine learning models loosely inspired by the human brain. Their architecture consists of artificial neurons, simple computational units modeled loosely on biological neurons and connected in layers. By adjusting the strength of these connections during training, they learn to approximate some of the pattern-recognition abilities of the human mind.

Depending upon the purpose or use case, you will come across various types of neural networks. The three most commonly used neural networks are Feed Forward Neural Networks, Recurrent Neural Networks (RNN), and Convolutional Neural Networks (CNN).

In this article, we will focus on Recurrent Neural Networks (RNNs) and learn about them in detail.

What is a Recurrent Neural Network?

Recurrent Neural Networks (RNNs) are a class of neural networks that carry a hidden state from one step of a sequence to the next. This hidden state acts as a sequential memory: it summarizes the inputs seen so far, and the network uses it together with the current input to make predictions or classifications.

Traditional neural networks assume that inputs are independent of each other. RNNs, by contrast, take the order of the inputs into account when determining the output.

It is important to note that RNNs are primarily used with sequential data. Now, what is sequential data? Whenever the points in a dataset depend on other points in the dataset, the data is sequential. Examples include time series of stock prices, documents, audio clips, and video clips.

In simple words, RNNs are employed when the order of data is significant for analysis. This is one characteristic that differentiates them from other neural networks.

Besides this, RNNs share the same weights across every time step: the parameters used to process the first element of a sequence are reused for every later element. This is in contrast to feed-forward networks, where each layer has its own separate set of weights.

Sharing parameters keeps the number of weights to learn independent of the sequence length and lets a single model handle sequences of different lengths. This is another distinctive quality of recurrent neural networks.
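To make the idea of shared weights concrete, here is a minimal NumPy sketch. The sizes, the random initialization, and the names `W_xh`, `W_hh`, `b_h`, and `run_rnn` are assumptions chosen for illustration; they do not come from any particular library.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_size = 4, 8  # illustrative sizes (assumption)

# One set of parameters, created once and reused at every time step.
W_xh = rng.normal(scale=0.1, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.1, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

def run_rnn(inputs):
    """Apply the same weights to every element of the sequence."""
    h = np.zeros(hidden_size)
    for x_t in inputs:
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
    return h

# The parameter count does not depend on how long the sequence is.
num_params = W_xh.size + W_hh.size + b_h.size  # 32 + 64 + 8

short_seq = rng.normal(size=(3, input_size))
long_seq = rng.normal(size=(50, input_size))
print(num_params, run_rnn(short_seq).shape, run_rnn(long_seq).shape)
# 104 (8,) (8,)
```

The same 104 parameters process both the 3-step and the 50-step sequence, which is what lets one model handle inputs of different lengths.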

What are the different types of Recurrent Neural Networks?

Unlike feed-forward networks, which map one fixed-size input to one fixed-size output, Recurrent Neural Networks can map input and output sequences of varying lengths.

Depending upon the lengths and sizes of the input and the output, RNNs are of 4 types:

- One to One

- One to Many

- Many to One

- Many to Many
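The four types listed above can be sketched with one shared recurrent step. This is an illustrative NumPy sketch, not a standard API: the function names, the sizes, and the choice to feed zeros after the first input in the one-to-many case are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_size, output_size = 3, 5, 2  # illustrative sizes
W_xh = rng.normal(scale=0.1, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.1, size=(hidden_size, hidden_size))
W_hy = rng.normal(scale=0.1, size=(output_size, hidden_size))

def step(x_t, h_prev):
    """One recurrent step: returns the new hidden state and an output."""
    h = np.tanh(W_xh @ x_t + W_hh @ h_prev)
    return h, W_hy @ h

def one_to_one(x):
    """One input, one output (a single step)."""
    return step(x, np.zeros(hidden_size))[1]

def many_to_many(xs):
    """One output per input element, e.g. tagging each word."""
    h, ys = np.zeros(hidden_size), []
    for x_t in xs:
        h, y = step(x_t, h)
        ys.append(y)
    return np.stack(ys)

def many_to_one(xs):
    """Read the whole sequence, keep only the final output."""
    return many_to_many(xs)[-1]

def one_to_many(x, num_steps):
    """One input, then keep stepping on zeros (an assumption of this sketch)."""
    h, ys, x_t = np.zeros(hidden_size), [], x
    for _ in range(num_steps):
        h, y = step(x_t, h)
        ys.append(y)
        x_t = np.zeros(input_size)
    return np.stack(ys)

xs = rng.normal(size=(6, input_size))
print(one_to_one(xs[0]).shape)      # (2,)
print(one_to_many(xs[0], 4).shape)  # (4, 2)
print(many_to_one(xs).shape)        # (2,)
print(many_to_many(xs).shape)       # (6, 2)
```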

How do Recurrent Neural Networks work?

What makes a Recurrent Neural Network unique is a loop that cycles information through the network. At each step, it computes a hidden state, and that state is fed back into the network along with the next input. Thus, the loop lets the model consider the previous inputs together with the current input.

Let’s understand this with the help of an example. Suppose you feed the word ‘Tomatoes’ to a simple feed-forward network and process it character by character. When it reaches the character ‘a’, it has no memory of the previous characters (T, o, m). So, it cannot use them to predict the next character.

However, if you feed it into an RNN, it would have the previous characters (T, o, m) in its sequential memory. So, predicting the next character, ‘t’, would be relatively easy.

In a nutshell, RNNs combine the recent past and the present to predict the future. Plain feed-forward networks have no built-in mechanism for this.
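The ‘Tomatoes’ example can be sketched in a few lines of NumPy. The weights here are random and untrained, and the names `feed_forward` and `rnn_state` are hypothetical; the point is only to show that the recurrent state depends on the characters that came before, while the memoryless version does not.

```python
import numpy as np

rng = np.random.default_rng(42)
alphabet = sorted(set("Tomatoes"))  # 7 distinct characters
hidden_size = 6                     # illustrative size (assumption)

# Hypothetical, untrained weights: random values are enough to show the mechanics.
W_xh = rng.normal(scale=0.5, size=(hidden_size, len(alphabet)))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))

def one_hot(ch):
    vec = np.zeros(len(alphabet))
    vec[alphabet.index(ch)] = 1.0
    return vec

def feed_forward(ch):
    """No memory: the result depends on the current character only."""
    return np.tanh(W_xh @ one_hot(ch))

def rnn_state(text):
    """Sequential memory: the hidden state depends on every character so far."""
    h = np.zeros(hidden_size)
    for ch in text:
        h = np.tanh(W_xh @ one_hot(ch) + W_hh @ h)
    return h

state_in_context = rnn_state("Toma")  # 'a' seen after T, o, m
state_shuffled = rnn_state("moTa")    # same characters, different order
state_alone = feed_forward("a")       # 'a' with no history

print(np.allclose(state_in_context, state_shuffled))  # False: order matters
print(np.allclose(state_in_context, state_alone))     # False: history matters
```

A trained model would add an output layer on top of the hidden state to score each candidate next character.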

How are Recurrent Neural Networks trained?

There are 3 major steps involved in training an RNN model:

1. Passing the entire data through the network (forward pass) and making a prediction

2. Comparing prediction with ground truth using a loss function and getting an error

3. Using error to do backpropagation, finding gradients at each node, and adjusting the assigned weights

RNNs use a variant of backpropagation called backpropagation through time (BPTT) to calculate gradients and adjust weights. Training continues until the loss stops improving or another stopping criterion, such as a maximum number of epochs, is reached.

BPTT differs from standard backpropagation in that it is specific to sequence data. It unrolls the network across the time steps of the sequence and propagates errors from the output layer back to the input layer. Because the same parameters are used at every time step, the gradients from all time steps are summed.

However, BPTT can be computationally expensive if the number of timesteps is fairly large.
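As a rough illustration of BPTT, the sketch below computes gradients for a tiny many-to-one RNN with a squared-error loss and checks one of them against a numerical estimate. The architecture, the loss, the sizes, and all function names are assumptions made for this example, not a reference implementation.

```python
import numpy as np

def forward(xs, W_xh, W_hh, w_hy):
    """Forward pass: keep every hidden state, predict from the last one."""
    hs = [np.zeros(W_hh.shape[0])]
    for x_t in xs:
        hs.append(np.tanh(W_xh @ x_t + W_hh @ hs[-1]))
    return hs, w_hy @ hs[-1]

def loss_fn(y, target):
    return 0.5 * (y - target) ** 2

def bptt(xs, target, W_xh, W_hh, w_hy):
    """Backward pass through time for the forward pass above."""
    hs, y = forward(xs, W_xh, W_hh, w_hy)
    dy = y - target                    # derivative of the loss w.r.t. y
    dw_hy = dy * hs[-1]
    dW_xh = np.zeros_like(W_xh)
    dW_hh = np.zeros_like(W_hh)
    dh = dy * w_hy                     # gradient reaching the last hidden state
    for t in reversed(range(len(xs))):
        dz = dh * (1.0 - hs[t + 1] ** 2)  # back through tanh
        dW_xh += np.outer(dz, xs[t])      # shared weights: sum over time steps
        dW_hh += np.outer(dz, hs[t])
        dh = W_hh.T @ dz                  # pass the gradient one step back
    return dW_xh, dW_hh, dw_hy

rng = np.random.default_rng(0)
xs = rng.normal(size=(5, 3))
W_xh = rng.normal(scale=0.5, size=(4, 3))
W_hh = rng.normal(scale=0.5, size=(4, 4))
w_hy = rng.normal(scale=0.5, size=4)
target = 1.0

dW_xh, dW_hh, dw_hy = bptt(xs, target, W_xh, W_hh, w_hy)

# Numerical check of one entry of dW_hh using central differences.
eps = 1e-6
W_plus, W_minus = W_hh.copy(), W_hh.copy()
W_plus[0, 1] += eps
W_minus[0, 1] -= eps
numeric = (loss_fn(forward(xs, W_xh, W_plus, w_hy)[1], target)
           - loss_fn(forward(xs, W_xh, W_minus, w_hy)[1], target)) / (2 * eps)
print(np.isclose(numeric, dW_hh[0, 1], atol=1e-6))  # True
```

The backward loop visits every time step once, which is why the cost of BPTT grows with the length of the sequence.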

What are the common problems of Recurrent Neural Networks?

During training with backpropagation, RNNs tend to run into two major problems: exploding gradients and vanishing gradients. Both are linked to the size of the gradients.

A gradient is the slope of the loss (error) function with respect to the model’s weights. It shows how much the error changes in response to a small change in a weight, and it determines the direction and size of each weight update.

Vanishing gradients occur when the gradient is too small and keeps shrinking as it is propagated back through the time steps. The weight updates become insignificant, and the model stops learning, especially about dependencies that span many time steps. To mitigate this issue, LSTM (Long Short-Term Memory) networks are used.

Exploding gradients occur when the gradient is too large and keeps growing as it is propagated back through the time steps. The weight updates become excessively large, leading to an unstable model. In extreme cases, the weights overflow and are represented as NaN values. To overcome this issue, the complexity (number of hidden layers) of the RNN can be reduced; clipping the gradients to a maximum size is another common remedy.
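A simplified way to see both problems is to assume a linear, single-unit RNN in which the gradient is multiplied by the same recurrent weight at every time step. The sketch below uses that assumption, and also shows gradient clipping by norm, a widely used remedy for exploding gradients. The helper names `gradient_scale` and `clip_by_norm` are hypothetical.

```python
import numpy as np

def gradient_scale(weight, num_steps):
    """Size of a gradient after being multiplied by `weight` at each step."""
    return abs(weight) ** num_steps

vanishing = gradient_scale(0.5, 50)  # below 1e-15: effectively zero
exploding = gradient_scale(1.5, 50)  # above 1e8: far too large
print(vanishing, exploding)

def clip_by_norm(grad, max_norm):
    """Rescale `grad` so that its L2 norm is at most `max_norm`."""
    norm = np.linalg.norm(grad)
    if norm > max_norm:
        grad = grad * (max_norm / norm)
    return grad

clipped = clip_by_norm(np.array([3.0, 4.0]), max_norm=1.0)
print(clipped)  # direction is kept, norm is reduced from 5 to 1
```

Clipping only limits the size of an update; it does not help with vanishing gradients, which is why gated architectures are used for that problem.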

What are the different RNN architectures used?

In practical scenarios, variant RNN architectures are used to overcome the limitations of simple RNNs.

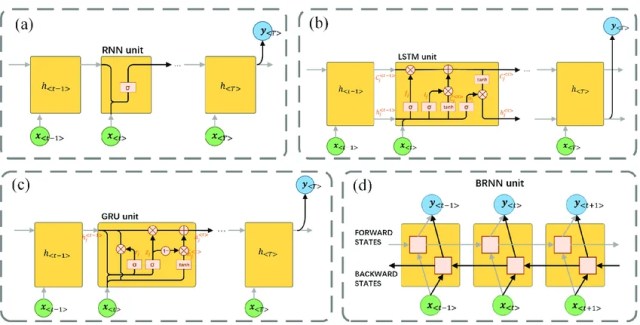

Bidirectional recurrent neural networks (BRNN): Bidirectional RNNs differ from unidirectional RNNs in that they consider both previous and future inputs when making a prediction at the current position. For example, if the model knows the first few and last few words of a sentence, it can predict the middle words more easily.
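A bidirectional RNN can be sketched as two independent RNNs, one reading the sequence left to right and one right to left, whose hidden states are concatenated at each position. The sizes, the random weights, and the function names below are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4  # illustrative sizes (assumption)

def make_params():
    """A separate, untrained set of weights for each direction."""
    return (rng.normal(scale=0.1, size=(hidden_size, input_size)),
            rng.normal(scale=0.1, size=(hidden_size, hidden_size)))

def run(xs, params):
    """Plain RNN that returns the hidden state at every position."""
    W_xh, W_hh = params
    h, states = np.zeros(hidden_size), []
    for x_t in xs:
        h = np.tanh(W_xh @ x_t + W_hh @ h)
        states.append(h)
    return np.stack(states)

fwd_params, bwd_params = make_params(), make_params()

def bidirectional(xs):
    forward_states = run(xs, fwd_params)
    # Read the sequence in reverse, then flip back to the original order.
    backward_states = run(xs[::-1], bwd_params)[::-1]
    return np.concatenate([forward_states, backward_states], axis=1)

xs = rng.normal(size=(6, input_size))
states = bidirectional(xs)
print(states.shape)  # (6, 8)
```

Each row of `states` combines a summary of everything before that position with a summary of everything after it.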

Long short-term memory (LSTM): As discussed earlier, LSTMs address the vanishing gradient problem. The architecture uses three gates called input, output, and forget. These gates determine which information to store, which to expose, and which to discard. As a result, the network can retain context over long sequences.
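One step of an LSTM cell can be sketched as follows. This follows the commonly described gate equations; packing all four weight blocks into a single matrix `W`, the gate ordering inside it, and the sizes are assumptions of this sketch.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4 * hidden, input + hidden)."""
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([x_t, h_prev]) + b
    f = sigmoid(z[0 * hidden:1 * hidden])  # forget gate: what to discard
    i = sigmoid(z[1 * hidden:2 * hidden])  # input gate: what to store
    o = sigmoid(z[2 * hidden:3 * hidden])  # output gate: what to expose
    g = np.tanh(z[3 * hidden:4 * hidden])  # candidate cell values
    c = f * c_prev + i * g                 # updated cell state (long-term memory)
    h = o * np.tanh(c)                     # updated hidden state
    return h, c

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4  # illustrative sizes (assumption)
W = rng.normal(scale=0.1, size=(4 * hidden_size, input_size + hidden_size))
b = np.zeros(4 * hidden_size)

h, c = np.zeros(hidden_size), np.zeros(hidden_size)
for x_t in rng.normal(size=(5, input_size)):
    h, c = lstm_step(x_t, h, c, W, b)
print(h.shape, c.shape)  # (4,) (4,)
```

When the forget gate is close to 1 and the input gate is close to 0, the cell state passes through a step almost unchanged, which is how the network keeps context over many steps.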

Gated recurrent units (GRUs): GRUs are similar to LSTMs but use two gates, called reset and update, to regulate the flow of information. These gates determine how much past information is kept for future predictions.
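A single GRU step might look like the sketch below. Biases are omitted for brevity, and the final interpolation uses one of the two sign conventions found in the literature (here a large update gate favors the new candidate); both choices, along with the sizes and names, are assumptions of this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x_t, h_prev, W_z, W_r, W_h):
    """One GRU step without biases. Each W has shape (hidden, input + hidden)."""
    xh = np.concatenate([x_t, h_prev])
    z = sigmoid(W_z @ xh)  # update gate: how much to replace the old state
    r = sigmoid(W_r @ xh)  # reset gate: how much old state feeds the candidate
    h_candidate = np.tanh(W_h @ np.concatenate([x_t, r * h_prev]))
    return (1.0 - z) * h_prev + z * h_candidate

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4  # illustrative sizes (assumption)
shape = (hidden_size, input_size + hidden_size)
W_z, W_r, W_h = (rng.normal(scale=0.1, size=shape) for _ in range(3))

h = np.zeros(hidden_size)
for x_t in rng.normal(size=(5, input_size)):
    h = gru_step(x_t, h, W_z, W_r, W_h)
print(h.shape)  # (4,)
```

Unlike the LSTM, this cell keeps a single state vector and has no separate cell state.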

What are some applications of Recurrent Neural Networks?

RNNs are widely used for NLP tasks including document summarization, speech recognition, language translation, and autocomplete. They are also used for music generation, image generation, and more.

Broadly, the applications of Recurrent Neural Networks are categorized into 3 types:

- Sequence Classification: Examples are sentiment classification, video clip classification, etc.

- Sequence Labelling: Examples are part-of-speech tagging (noun, verb, pronoun, etc.) and named entity recognition.

- Sequence Generation: Examples are machine translation, autocomplete, music generation, text summarization, image captioning, chatbots, etc.

Want to build and scale your business with AI technologies?

You’re at the right place. Anubrain is a pioneer in the development of AI technologies and neural network-based solutions. If you wish to apply advanced natural language processing and deep learning techniques to your systems, reach out to us today. We will help you seamlessly adopt and implement AI!

The world is getting accustomed to increasing digital usage and generating tons of data daily. And there’s a lot that can be done with data. So, you’d find me experimenting with different datasets most of the time, besides raising my 1-year-old daughter and writing some blogs!
